
Special Issue Information Sciences

Fundamental Study on Detection of Dangerous Objects on the Road Surface Leading to Motorcycle Accidents Using a 360-Degree Camera

Journal of Digital Life. 2025, 5, S1.

Received: November 22, 2024; Accepted: January 18, 2025; Published: March 26, 2025

- Haruka Inoue

- Faculty of Information Technology and Social Sciences, Osaka University of Economics

- Yuma Nakasuji

- Faculty of Information Technology and Social Sciences, Osaka University of Economics

Correspondence: h.inoue@osaka-ue.ac.jp

This article has a correction.

Abstract

In recent years, the number of fatalities in traffic accidents involving motorcyclists has remained almost unchanged, and single-vehicle accidents accounted for 37.2% of all accidents by accident type over the past five years. In the development of overturn prevention devices for motorcycles, problems remain in retrofitting the device to existing motorcycles and in downsizing it. Meanwhile, an existing study has proposed a deep learning method for detecting dangerous objects on the road surface that can cause motorcycles to overturn, but this method still needs to be verified under different conditions. In this study, we apply a method that uses YOLO to detect dangerous objects on the road surface in video images to two types of 360-degree cameras and verify that the method is versatile under different conditions.

1. Introduction

In recent years, the number of fatalities in traffic accidents involving motorcyclists has remained almost unchanged, and although the Metropolitan Police Department has been holding motorcycle safety classes, the total number of fatalities across all ages increased in 2023. Single-vehicle accidents accounted for 37.2% of all accidents by accident type over the five years from 2018 to 2022 (Tokyo Metropolitan Police Department, 2024). Statistics on traffic accidents resulting in injury or death in 2024 show that the number of fatalities in motorcycle accidents is about twice that in automobile accidents. Although advanced driver-assistance systems (ADAS) for motorcycles have been developed (Nikkei xTECH, 2024), their adoption has been slower than for automobiles. Riders are therefore required to follow traffic rules and to predict danger instantly. An existing study on an overturn prevention device for motorcycles that uses the gyro effect points to the need to downsize the device (Senoo et al., 2017). A study on using deep learning to detect dangerous objects that may cause motorcycles to overturn (Inoue et al., 2023) and a study on using 360-degree cameras to detect dangers leading to motorcycle accidents (Inoue et al., 2024) both leave verification under different conditions as an open issue. In this study, we apply a method that uses YOLO to detect dangerous objects on the road surface in video images (hereinafter referred to as the “dangerous object detection method”) to two types of 360-degree cameras and verify that the method is versatile. As in the existing studies, fallen leaves, gravel, manholes, bumps, and wet road surfaces are treated as dangerous objects on the road surface.

2. Method

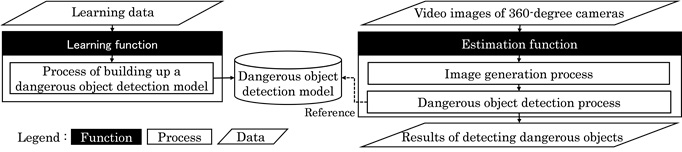

Fig. 1 shows the process flow of the dangerous object detection method, which consists of a learning function and an estimation function. The input to the learning function is the learning data, and its output is the dangerous object detection model. The input to the estimation function is video captured by the 360-degree camera while riding a motorcycle, and its output is the result of dangerous object detection.

The learning function builds a model for detecting dangerous objects on the road surface that may cause a motorcycle to overturn. Specifically, as shown in Table 1, dangerous objects on the road surface are annotated in images and learned with YOLOv5 to build a model that detects fallen leaves, gravel, manholes, bumps, and wet road surfaces as dangerous objects in video images (hereinafter referred to as the “dangerous object detection model”).
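Since YOLOv5 reads one text label file per image, the following Python sketch shows how an annotated bounding box can be written in that label format: a class index followed by the box center, width, and height, each normalized by the image size. The class order, the class names, and the function `to_yolo_label` are hypothetical; the paper does not report its annotation tool or class indices.

```python
# Hypothetical class-index mapping for the five dangerous-object classes;
# the class order actually used in the study is not reported.
CLASSES = ["fallen_leaves", "gravel", "manhole", "bump", "wet_road"]


def to_yolo_label(class_name: str, box: tuple[float, float, float, float],
                  img_w: int, img_h: int) -> str:
    """Convert a pixel bounding box (x_min, y_min, x_max, y_max) into one line
    of a YOLO label file: "class x_center y_center width height", with all
    coordinates normalized to [0, 1] by the image width and height."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    cls = CLASSES.index(class_name)
    xc = (x_min + x_max) / 2 / img_w  # normalized box center (x)
    yc = (y_min + y_max) / 2 / img_h  # normalized box center (y)
    w = (x_max - x_min) / img_w       # normalized box width
    h = (y_max - y_min) / img_h       # normalized box height
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

For example, a manhole annotated at pixels (800, 600) to (1000, 700) in a 2000 × 1000 image becomes the line `2 0.450000 0.650000 0.100000 0.100000`.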

The estimation function detects dangerous objects on the road surface in video images captured by the 360-degree camera. In the image generation process, the THETA+ application is used to convert the display format to flat, crop the image to an aspect ratio of 1.91:1, and extract frames from the video at 3 fps. In the dangerous object detection process, dangerous objects on the road surface are detected using the dangerous object detection model built by the learning function.
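The geometry of the cropping and frame extraction steps can be sketched in Python as follows. The flat conversion itself is done by the THETA+ application and is not reproduced here; the sketch assumes a flat frame of known size and computes a 1.91:1 crop and the timestamps at which frames are taken at 3 fps. The function names, the centered placement of the crop, and the example frame sizes are assumptions, not details reported in the paper.

```python
import math


def centered_crop(width: int, height: int, ratio: float = 1.91) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered crop whose
    width/height is approximately `ratio` (1.91:1 in the study)."""
    if width / height > ratio:
        # Frame is wider than the target: keep the full height, trim the sides.
        new_w, new_h = round(height * ratio), height
    else:
        # Frame is taller than the target: keep the full width, trim top and bottom.
        new_w, new_h = width, round(width / ratio)
    left = (width - new_w) // 2
    top = (height - new_h) // 2
    return left, top, left + new_w, top + new_h


def sample_timestamps(duration_s: float, out_fps: float = 3.0) -> list[float]:
    """Timestamps in seconds at which frames are taken so that `out_fps`
    still images are extracted per second of video."""
    n = math.floor(duration_s * out_fps)
    return [i / out_fps for i in range(n)]
```

For example, `centered_crop(1920, 1080)` keeps the full width and trims the frame to 1,920 × 1,005 pixels, and a 10-second clip yields 30 frames at 3 fps.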

Table 1. Example of annotation

3. Demonstration Experiment

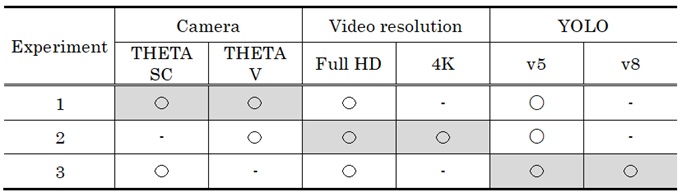

In this experiment, we confirm the versatility of the proposed method by applying it to video images shot with 360-degree cameras under different conditions of camera type, resolution, and YOLO version. The experimental conditions for Experiments 1 to 3 are shown in Table 2.

3.1. Method of the experiment

First, this study targets two types of cameras, THETA SC and THETA V, both made by RICOH. In this experiment, each 360-degree camera is mounted on the front of the motorcycle (Fig. 2). A male rider in his 20s rides the motorcycle multiple times along the same section of road in an urban area of Wakayama Prefecture, keeping the speed as constant as possible. The dangerous object detection method is then applied to each video, and the detection results are compared with manually created correct-answer data and evaluated by precision, recall, and F-measure. The learning model was built from images different from those used for evaluation. The learning data consisted of footage shot with THETA SC on March 20 and 21 and July 10 and 12, 2024, and 3,103 images were used to build the dangerous object detection model. The evaluation data were shot with THETA SC and THETA V on March 22 and July 11, 2024.
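For reference, the standard definitions of the three evaluation measures are shown below, where TP is the number of detections that agree with the correct-answer data, FP is the number of detections with no corresponding correct answer, and FN is the number of correct answers that were not detected. The paper does not state the criterion used to decide that a detection agrees with a correct answer, so that criterion is left open here, and the F-measure is assumed to be the usual balanced form (F1).

```latex
\begin{aligned}
\mathrm{Precision} &= \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}, \\
F &= \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
   = \frac{2\,TP}{2\,TP + FP + FN}
\end{aligned}
```

Because the F-measure is the harmonic mean of precision and recall, it stays low whenever either of the two is low.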

Table 2. Experimental conditions

3.2. Experiment 1: Verification of versatility for different types of cameras

In Experiment 1, the dangerous object detection method is applied to video images shot with THETA SC and THETA V to verify its versatility across camera types. The two cameras differ in ISO sensitivity range: 100 to 1,600 for THETA SC and 64 to 6,400 for THETA V. Both record video in full HD.

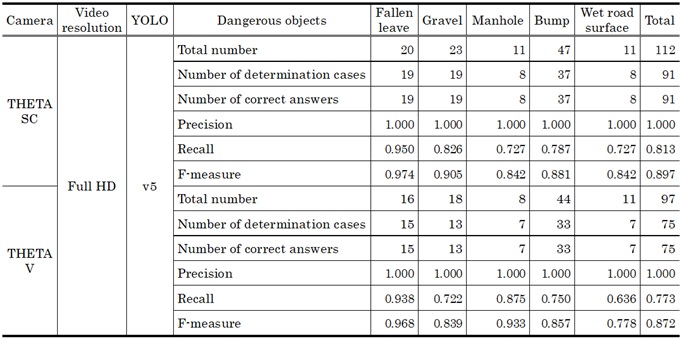

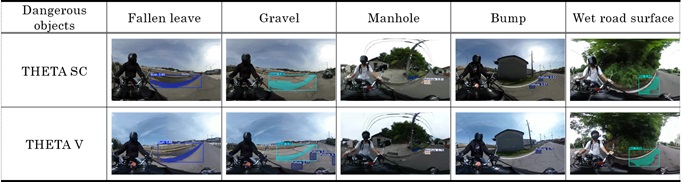

Table 3 shows the results of Experiment 1, and Table 4 shows an example of the detection results. The overall F-measure is 0.917 for THETA SC and 0.839 for THETA V, indicating that the dangerous object detection method detects dangerous objects on the road surface correctly on the whole. The difference in F-measure between THETA SC and THETA V is 0.078, which is small. However, as the results for bumps and wet road surfaces in Table 3 show, there were cases in which only one of the two 360-degree cameras detected a dangerous object, even when the images were taken at the same point. In addition, comparing the F-measure by dangerous object type, the F-measure was lower for bumps and wet road surfaces than for fallen leaves, gravel, and manholes. The detection results show a tendency to miss small bumps and wet road surfaces covered by shadows. To improve the method, we will increase the learning data captured in various environments and change the version of YOLO.
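Table 3 reports F-measures both by dangerous object type and overall, but the paper does not say how the overall value is aggregated. The Python sketch below assumes micro-averaging, in which the TP, FP, and FN counts of all classes are pooled before the measures are computed; macro-averaging (the mean of the per-class F-measures) would be an equally plausible alternative. The class names and counts in the usage example are hypothetical and are not taken from Table 3.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int  # detections that agree with the correct-answer data
    fp: int  # detections with no corresponding correct answer
    fn: int  # correct answers that were not detected


def prf(c: Counts) -> tuple[float, float, float]:
    """Precision, recall, and F-measure; 0.0 where a ratio is undefined."""
    p = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    r = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


def evaluate(per_class: dict[str, Counts]) -> dict[str, tuple[float, float, float]]:
    """Per-class scores plus an 'overall' entry computed by pooling the
    TP/FP/FN counts of all classes (micro-averaging)."""
    results = {name: prf(c) for name, c in per_class.items()}
    pooled = Counts(
        tp=sum(c.tp for c in per_class.values()),
        fp=sum(c.fp for c in per_class.values()),
        fn=sum(c.fn for c in per_class.values()),
    )
    results["overall"] = prf(pooled)
    return results
```

With hypothetical counts of `Counts(9, 1, 0)` for manholes and `Counts(3, 1, 4)` for bumps, the per-class F-measures are about 0.947 and 0.545, and the pooled overall F-measure is 0.8, whereas the macro-average of the two per-class values would be about 0.746.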

Table 3. Experimental result of Experiment 1

Table 4. Result of detecting dangerous objects with different cameras

3.3. Experiment 2: Verification of versatility for different resolutions

In Experiment 2, the dangerous object detection method is applied to video images shot with THETA V at full HD and 4K resolution to verify its versatility across resolutions. THETA SC was excluded from this experiment because it records video only in full HD.

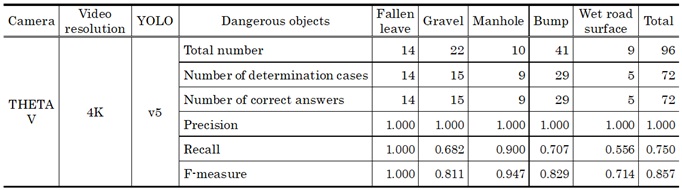

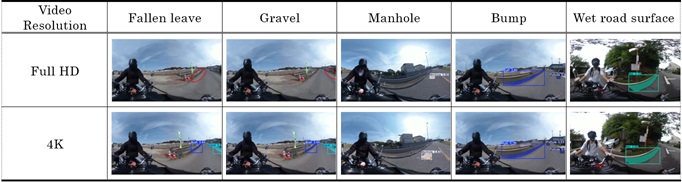

Table 5 shows the results of Experiment 2, and Table 6 shows an example of the detection results. The overall F-measure is 0.872 for full HD and 0.857 for 4K, indicating that the dangerous object detection method detects dangerous objects on the road surface correctly for the most part. The difference in F-measure between full HD and 4K is 0.015, which is small. However, the detection results show that 4K tends to detect dangerous objects located farther away than full HD does.
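The advantage of 4K for distant objects can be illustrated with a rough calculation. In an equirectangular 360-degree frame, the horizontal pixel position is proportional to the azimuth angle, so an object of width w at horizontal distance d spans about W · 2·atan(w / 2d) / 2π pixels in a frame W pixels wide. The Python sketch assumes frame widths of 1,920 pixels for full HD and 3,840 pixels for 4K and a hypothetical 0.6 m wide object 30 m ahead; it ignores the THETA+ flat conversion, whose effect on resolution the paper does not describe.

```python
import math


def horizontal_pixels(object_width_m: float, distance_m: float, frame_width_px: int) -> float:
    """Approximate horizontal extent, in pixels, of an object in an
    equirectangular 360-degree frame, where x maps linearly to azimuth
    (frame_width_px pixels cover 2*pi radians)."""
    azimuth_span = 2 * math.atan(object_width_m / (2 * distance_m))  # radians
    return frame_width_px * azimuth_span / (2 * math.pi)


# Assumed frame widths and a hypothetical 0.6 m object 30 m ahead.
FULL_HD_WIDTH, UHD_4K_WIDTH = 1920, 3840
px_fhd = horizontal_pixels(0.6, 30.0, FULL_HD_WIDTH)
px_4k = horizontal_pixels(0.6, 30.0, UHD_4K_WIDTH)
print(f"full HD: {px_fhd:.1f} px, 4K: {px_4k:.1f} px")
```

Under these assumptions the object spans roughly 6 pixels in full HD and 12 pixels in 4K, which is consistent with 4K detecting distant objects more often, although the flat conversion and any image resizing before detection would change the actual figures.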

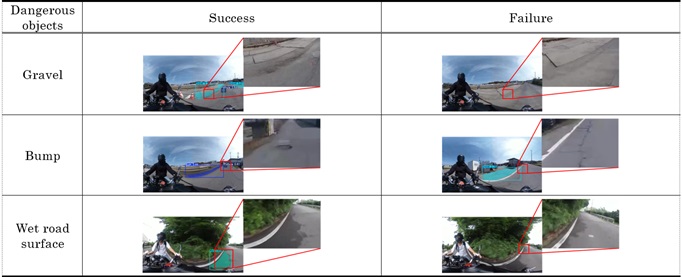

Comparing the F-measure by type of dangerous object shows that, at 4K as at full HD, the F-measure is high for fallen leaves and manholes. For the dangerous objects whose F-measure is lower at 4K than at full HD, namely gravel, bumps, and wet road surfaces, examples of successful and failed detection are shown in Table 7. The detection results indicate that the method tends to miss light-colored gravel, bumps whose unevenness is hard to see, and wet road surfaces where the wet area is small. In the future, we plan to improve the method by increasing the learning data captured under diverse environments and by changing the version of YOLO.

Table 5. Experimental result of Experiment 2

Table 6. Result of detecting dangerous objects with different resolutions

3.4. Experiment 3: Verification of versatility for different versions of YOLO

In Experiment 3, dangerous object detection models are built with two versions of YOLO, YOLOv5 and YOLOv8, to verify the versatility of the method across YOLO versions.
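Because YOLOv5 and YOLOv8 read the same label-file format and a similar dataset description file, the same annotated images can be reused to train both versions. The Python sketch below generates such a description in the YAML layout used by recent releases of the YOLOv5 repository and by the Ultralytics package: a root path, training and validation image directories, and an index-to-name class map. The directory names, the class order, and the function `dataset_yaml` are hypothetical.

```python
# Hypothetical class order; the study's actual class indices are not reported.
CLASSES = ["fallen_leaves", "gravel", "manhole", "bump", "wet_road"]


def dataset_yaml(root: str, train_dir: str = "images/train",
                 val_dir: str = "images/val") -> str:
    """Build a dataset description with a root path, train/val image
    directories (relative to the root), and an index-to-name map of classes."""
    lines = [f"path: {root}", f"train: {train_dir}", f"val: {val_dir}", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(CLASSES)]
    return "\n".join(lines) + "\n"
```

The resulting file could then be passed to each framework, for example with `python train.py --data data.yaml --weights yolov5s.pt` in the YOLOv5 repository or `yolo detect train data=data.yaml model=yolov8s.pt` with the Ultralytics package; the model sizes shown are assumptions, since the paper does not report them.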

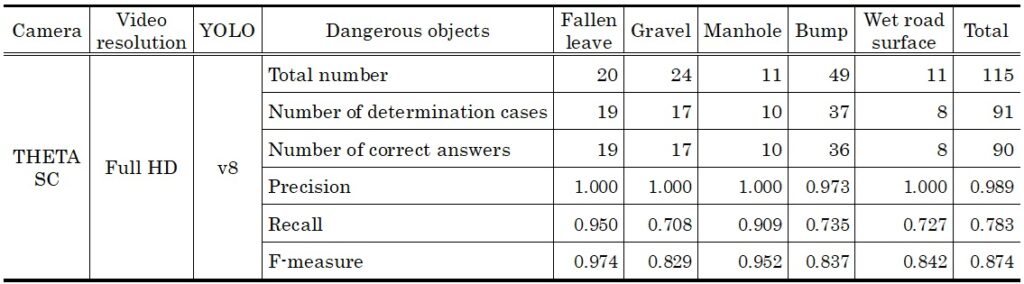

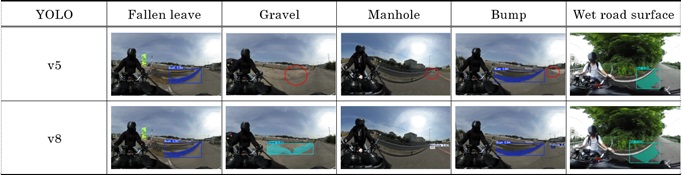

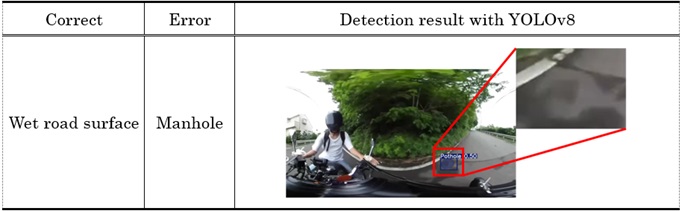

Table 8 shows the results of Experiment 3, and Table 9 shows an example of the detection results. The overall F-measure is 0.897 for YOLOv5 and 0.874 for YOLOv8, indicating that the dangerous object detection method detects dangerous objects on the road surface correctly for the most part. The detection results show that YOLOv8 tends to detect distant dangerous objects on the road surface better than YOLOv5. However, YOLOv8 sometimes erroneously detected a wet road surface as a manhole; the detection results in Table 10 suggest that the cause is the similarity between the pattern of the wet road surface and that of a manhole. In addition, for gravel, whose F-measure is lower with YOLOv8 than with YOLOv5, YOLOv8 has low recall and misses gravel more often than the other dangerous objects. As in Experiment 2, a likely cause is the difference in the color of the gravel. Because this color difference arises from differing weather conditions, as shown in Table 11, we plan to improve the method by increasing the learning data captured under diverse environments.
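Under a common counting convention, the confusion reported for YOLOv8, in which a wet road surface is detected as a manhole, lowers two measures at once: it is a missed detection (FN) for the wet road surface and a false detection (FP) for the manhole. The Python sketch below counts TP, FP, and FN per class from pairs of correct and detected classes for regions that have already been matched; the matching step, the pair representation, and the class names are assumptions, and the example pairs are hypothetical.

```python
from collections import Counter
from typing import Optional

Pair = tuple[Optional[str], Optional[str]]  # (correct class, detected class)


def per_class_counts(pairs: list[Pair], classes: list[str]) -> dict[str, tuple[int, int, int]]:
    """Return {class: (TP, FP, FN)}.

    A pair with the same class on both sides is a TP. A detected class of None
    means the object was missed (FN); a correct class of None means a detection
    with no corresponding object (FP). A matched region with the wrong class
    counts as an FN for the correct class and an FP for the detected class.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in pairs:
        if truth is not None and truth == pred:
            tp[truth] += 1
            continue
        if truth is not None:
            fn[truth] += 1
        if pred is not None:
            fp[pred] += 1
    return {c: (tp[c], fp[c], fn[c]) for c in classes}


# Hypothetical example: one wet road surface is detected as a manhole.
pairs = [("wet_road", "wet_road"), ("wet_road", "manhole"),
         ("manhole", "manhole"), ("gravel", None)]
print(per_class_counts(pairs, ["wet_road", "manhole", "gravel"]))
```

In this example the single confusion leaves the wet road surface with one FN and the manhole with one FP, so recall drops for one class and precision for the other.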

The results of Experiments 1 to 3 show little difference in the overall F-measure when the dangerous object detection method is applied to video images shot with 360-degree cameras under different camera types, resolutions, and YOLO versions, which indicates the versatility of the proposed method. Furthermore, with a view to practical use at actual sites on different dates and under different weather conditions, the detection accuracy of 0.85 or higher obtained across different dates and weather conditions in this study indicates that the dangerous object detection method is useful.

Table 7. Examples of success and failure for the results of detecting dangerous objects

Table 8. Experimental result of Experiment 3

Table 9. Result of detecting dangerous objects with different versions of YOLO

Table 10. Example of erroneous detection of the wet road surface

Table 11. Shot images of dangerous objects under different weather conditions

4. Conclusion

In this study, we verified the versatility of a method for detecting dangerous objects on the road surface, including fallen leaves, gravel, manholes, bumps, and wet road surfaces. In the demonstration experiments, we applied the dangerous object detection method to video images shot under different conditions of 360-degree camera (THETA SC and THETA V), resolution (full HD and 4K), and YOLO version (YOLOv5 and YOLOv8) and evaluated precision, recall, and F-measure. The experiments showed little difference in F-measure across camera types, resolutions, and YOLO versions, and the method detected dangerous objects on the road surface correctly for the most part.

In the future, we plan to improve accuracy by adding learning data captured in a variety of environments and to address detection errors through repeated verification under different conditions. We also aim to reduce motorcycle accidents by detecting the factors that lead to them, using additional information about the riders.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

Inoue, H., et al. (2023). Research for detecting dangerous objects leading to overturning of motorcycles using deep learning. Proceedings of the 85th National Convention of IPSJ, 85(1), 965–966.

Inoue, H., et al. (2024). Research for Detecting Dangerous Leading to Motorcycle Accident Using 360 Degree Camera. Proceedings of the 86th National Convention of IPSJ, 86(1), 2-351–2-352.

Nikkei xTECH. (2024). Hitachi Astemo aims to commercialize ADAS for motorcycles by 2028.

Senoo, D., et al. (2017). Development of motor-and-bicycle anti roll-down system. The Proceedings of the Transportation and Logistics Conference, 26. https://doi.org/10.1299/jsmetld.2017.26.1104

Tokyo Metropolitan Police Department. (2024). Motorcycle traffic fatality statistics (through 2023). https://www.keishicho.metro.tokyo.lg.jp/kotsu/jikoboshi/nirinsha/2rin_jiko.html
